The artificial intelligence landscape was turned upside down less than a year ago by the launch of ChatGPT. The AI world is moving faster than ever, especially with the emergence of generative AI. Last year we published AI for Rookies, an introduction to the technology (below), and now it’s time to update it with 3 new concepts.

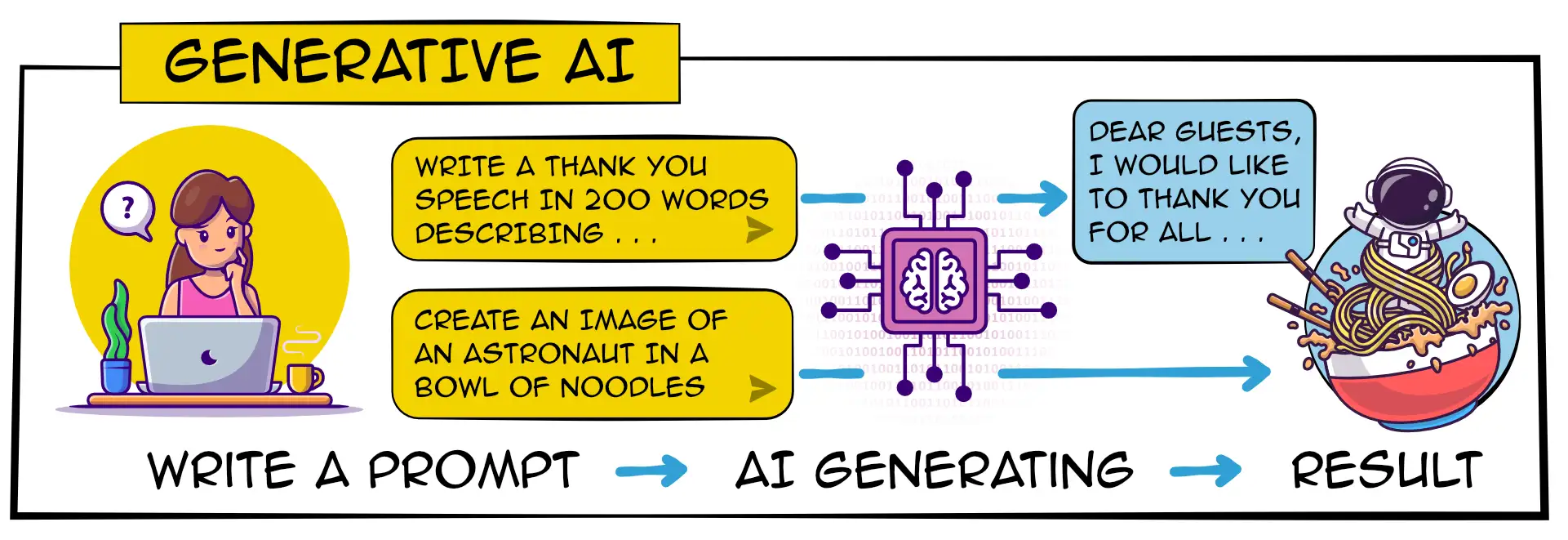

Generative AI

Generative AI is a field of Artificial Intelligence that creates (generates) original content in response to a prompt. It can generate different types of content such as text, images, video, code, music, molecules or even a mix of these. Algorithms are trained on very large datasets and learn their underlying patterns and structures. The AI model can then generate new content that follows similar patterns, and the results can sometimes be very creative and surprisingly human-like and realistic.
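To make the idea of "learning patterns, then generating something new" concrete, here is a deliberately tiny sketch. It is not how modern generative AI works internally (those systems rely on large neural networks); it only assumes a toy "bigram" model that remembers which word followed which in a training text. The names `train_bigrams`, `generate` and the sample corpus are made up for this illustration.

```python
import random
from collections import defaultdict


def train_bigrams(text):
    """Record, for every word, which words followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, length, rng):
    """Build a new word sequence by repeatedly sampling a seen follower."""
    output = [start]
    for _ in range(length - 1):
        options = followers.get(output[-1])
        if not options:  # no known continuation: stop early
            break
        output.append(rng.choice(options))
    return " ".join(output)


# Hypothetical training text; real models are trained on vastly more data.
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigrams(corpus)
sample = generate(model, "the", 8, random.Random(0))
print(sample)
```

Every pair of neighbouring words in the output was seen during training, yet the sentence as a whole may be one that never appeared in the corpus. That is the core intuition, at a very small scale.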

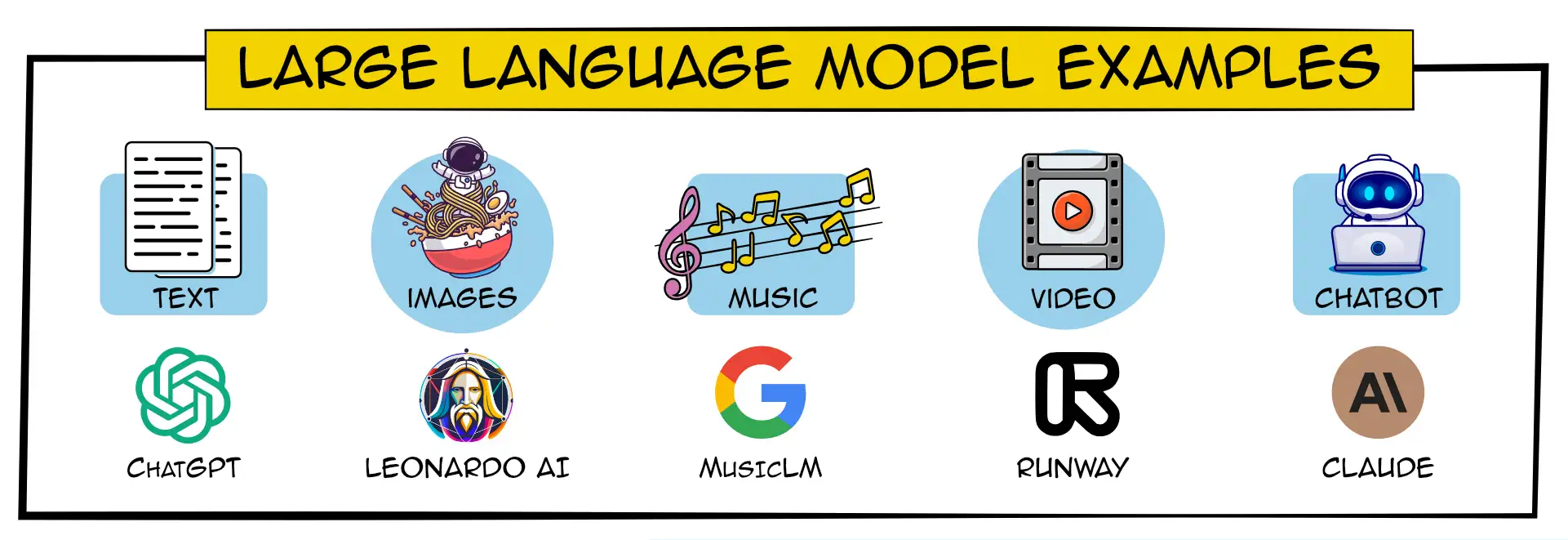

Large Language Model (LLM)

A Large Language Model (LLM) is a language model with a very large number of parameters that is trained on a massive dataset. It’s like a super-smart language robot that has been trained on a massive amount of text from the internet and other sources. These language models use complex algorithms and deep learning techniques to analyze patterns in the text data they’ve been exposed to. This allows them to grasp grammar, vocabulary, and even context. Some of the most well-known LLMs are GPT-4, Claude, BERT, Llama 2 and PaLM. Large Language Models are used for a wide range of tasks, such as answering questions, language translation, writing assistance, chatbots, visual creation, research and much more.

ChatGPT and other Generative AI applications

ChatGPT gives this definition of itself: “ChatGPT is a language model developed by OpenAI, designed to engage in human-like conversation and generate text-based responses by understanding and processing natural language inputs.”

Since OpenAI disrupted the market less than 12 months ago with the public release of ChatGPT, which achieved one of the fastest adoption rates in history, many companies have released their own generative AI solutions and applications for text (ChatGPT, Bard), images (Midjourney, DALL-E), video (Stability AI, Runway ML) or music (MusicLM). The list is too long to name them all here, but all the big tech companies, such as Google with Bard, Microsoft with Copilot and its $10B investment in OpenAI, or Meta with LLaMA, along with a galaxy of start-ups and scale-ups, are working on changing the world of AI.

AI for Rookies, an introduction to technology

Voice assistants, autonomous cars, connected cities: our daily lives are now paced by technology, especially Artificial Intelligence, Deep Learning and Natural Language Processing. But what is really behind all these words that are as fascinating as they are worrying? Here is a glossary of terms to help you understand what they are all about.

Artificial Intelligence

Originating in the 1950s, Artificial Intelligence is a set of techniques designed to enable machines to reproduce human functions and behaviours, such as recognising images, understanding human language or managing automated operations.

Very early on, it was thought that it would be possible to create machines capable of thinking by themselves, which would then become more “intelligent” than humans. Many difficulties have so far prevented the scenarios of science fiction movies from becoming reality, but thanks to the recent development of Machine Learning and Deep Learning, a new era of Artificial Intelligence is now opening up.

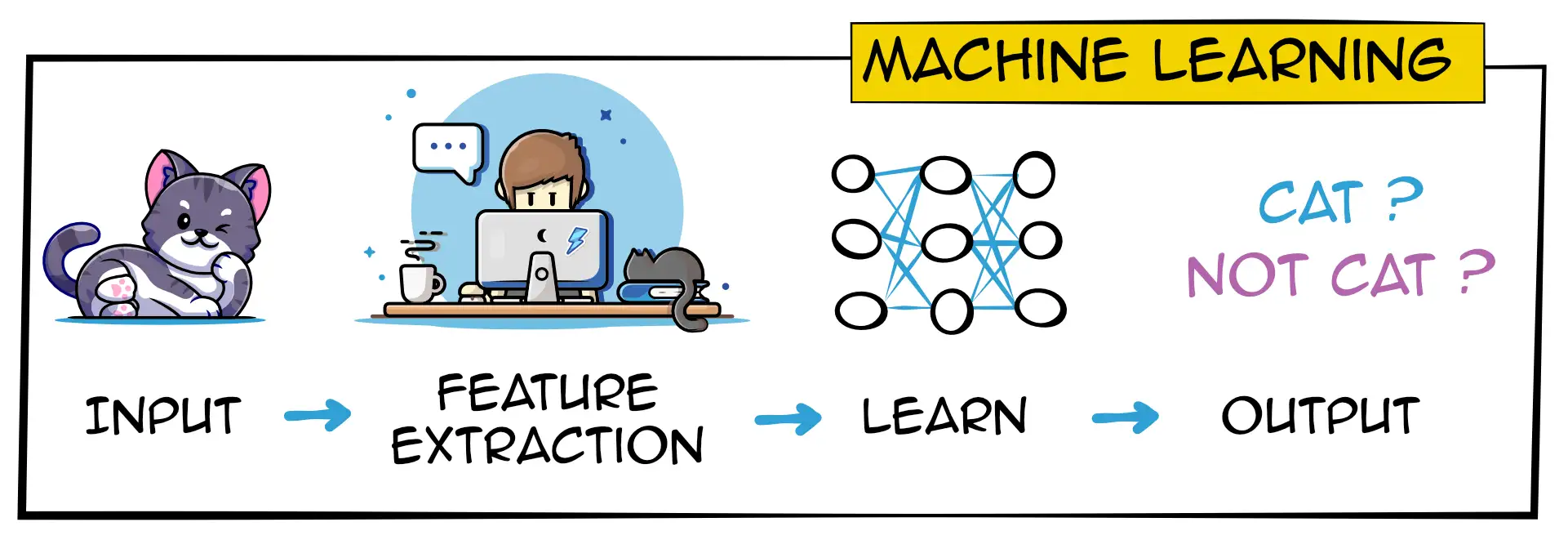

Machine Learning

Machine Learning is an automatic learning technique that teaches a machine how to process and organise a huge volume of data. The aim is to generate an automated answer from new data that has never been processed before.

Machine Learning can be divided into 2 main categories:

Supervised learning: This is learning from a batch of data previously labelled by a human. The model learns from these examples, as if it were learning by heart.

Unsupervised learning: This is learning from a raw database without prior labelling. It relies on algorithms capable of grouping and classifying data with common characteristics or structures.
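The difference between the two categories can be sketched in a few lines. This is a simplified illustration, not a production technique: it assumes one-dimensional data, a "nearest average" classifier for the supervised case and a two-group k-means for the unsupervised case. All function names and data values are invented for the example.

```python
from statistics import mean


def fit_supervised(samples):
    """Supervised: average the values seen for each human-provided label."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Assign a new value to the label whose average is closest."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def cluster_unsupervised(values, steps=10):
    """Unsupervised: split unlabelled values into two groups (1-D k-means)."""
    low, high = min(values), max(values)  # initial group centres
    for _ in range(steps):
        group_low = [v for v in values if abs(v - low) <= abs(v - high)]
        group_high = [v for v in values if abs(v - low) > abs(v - high)]
        if not group_low or not group_high:  # cannot split any further
            break
        low, high = mean(group_low), mean(group_high)
    return sorted(group_low), sorted(group_high)


# Hypothetical labelled data: (value, label chosen by a human).
labelled = [(1.0, "short"), (2.0, "short"), (9.0, "long"), (10.0, "long")]
centroids = fit_supervised(labelled)
print(predict(centroids, 3.0))

# The same kind of values, but without any labels.
unlabelled = [1.0, 2.0, 9.0, 10.0, 1.5, 9.5]
print(cluster_unsupervised(unlabelled))
```

In the supervised case the labels "short" and "long" come from a human; in the unsupervised case the algorithm finds the two groups itself but has no names for them.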

Previously, 100% of a program had to be coded by hand, which meant foreseeing every possible case and resulted in unmanageable decision trees. Machine Learning has opened up new perspectives because it allows programs to be more intelligent and machines to be more useful. However, we are still a long way from machines that fully learn by themselves, notably because the volume of available data and computing capacity are still limited.

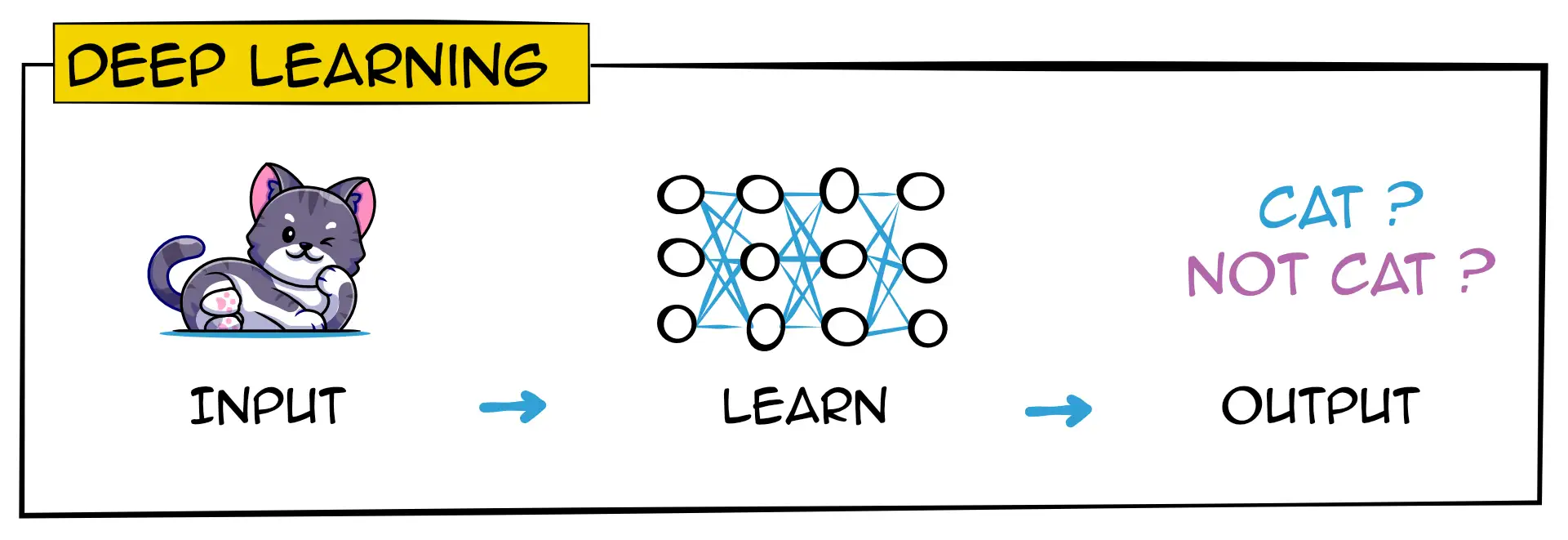

Deep Learning

Deep Learning is a field of Artificial Intelligence, which stems from Machine Learning and is based on artificial neural networks.

Thanks to soaring computing power and the growing availability of data, the performance of algorithms has improved enormously in recent years.

Deep Learning can autonomously process a huge volume of raw data and classify it without human intervention, enabled in particular by complex neural network architectures.

Applied to language processing, for example, Deep Learning represents a massive shift. The performance of Deep Learning algorithms, which is far superior to that of traditional Machine Learning, now makes it possible to process larger amounts of data. The result is a better understanding of language, including the implicit part or the ambiguity of a sentence; in short, its real meaning.

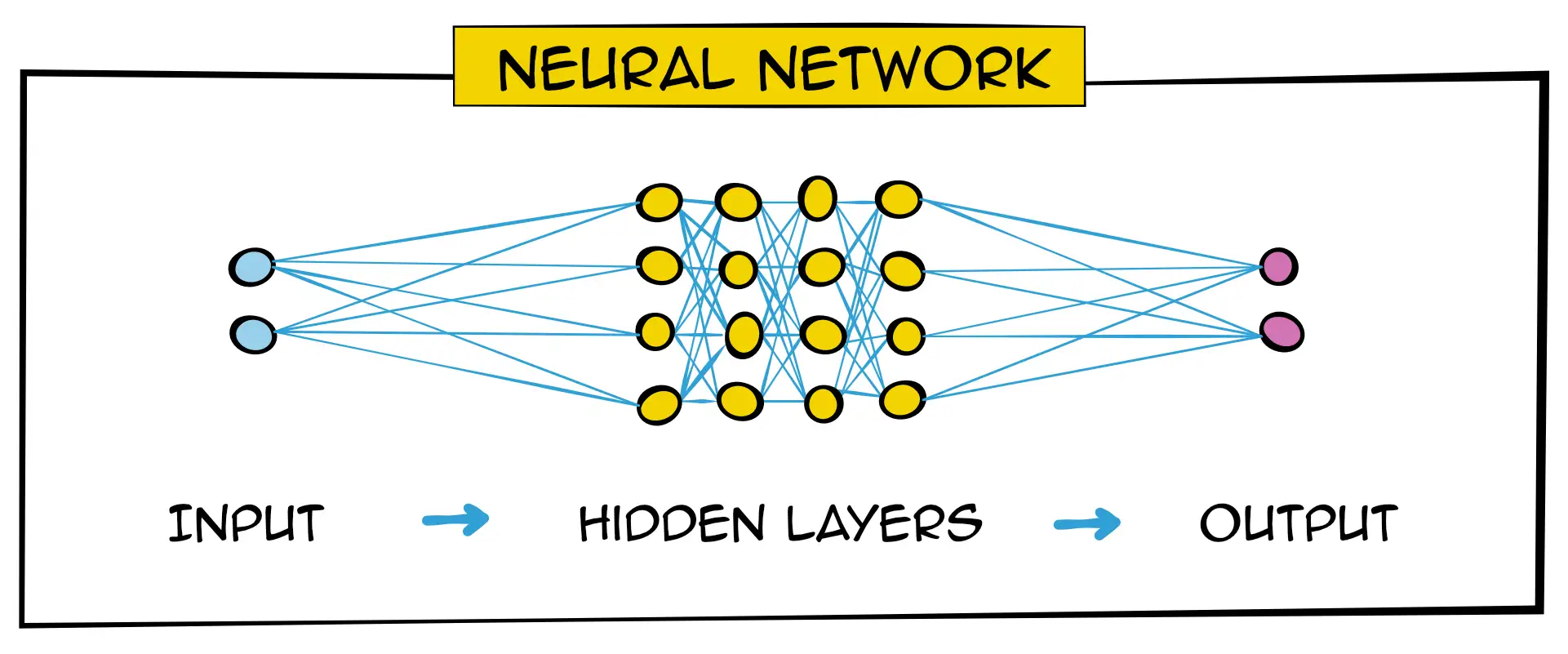

Neural Network

An artificial neural network is a mathematical and statistical system that is roughly based on the real functioning of neurons in our own nervous system.

An artificial neural network consists of layers of nodes (cells), each with inputs (from all cells in the previous layer) and an output (to all cells in the next layer). Each cell multiplies each incoming value by a number (a weight), combines the results and then passes the outcome to all cells in the next layer.
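The layer-by-layer calculation described above can be sketched as follows. This is a minimal illustration under a few assumptions that go beyond the text: each node adds a constant (a "bias") to its weighted sum and squashes the result with a sigmoid function, and the weights are picked by hand rather than learned. The names `layer_forward`, `hidden_weights` and so on are hypothetical.

```python
import math


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Compute one layer: each node takes a weighted sum of all inputs."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs


# Hypothetical, hand-picked weights; a real network learns them from data.
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]  # 2 hidden nodes, 2 inputs each
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -1.0]]              # 1 output node, 2 inputs
output_biases = [0.0]

inputs = [1.0, 1.0]
hidden = layer_forward(inputs, hidden_weights, hidden_biases)
output = layer_forward(hidden, output_weights, output_biases)
print(hidden, output)
```

Each call to `layer_forward` is one layer: the outputs of the hidden layer become the inputs of the output layer, which is exactly the "pass the result to the next layer" step.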

This layered operation allows a much larger volume of data to be handled and has encouraged the emergence of Deep Learning.

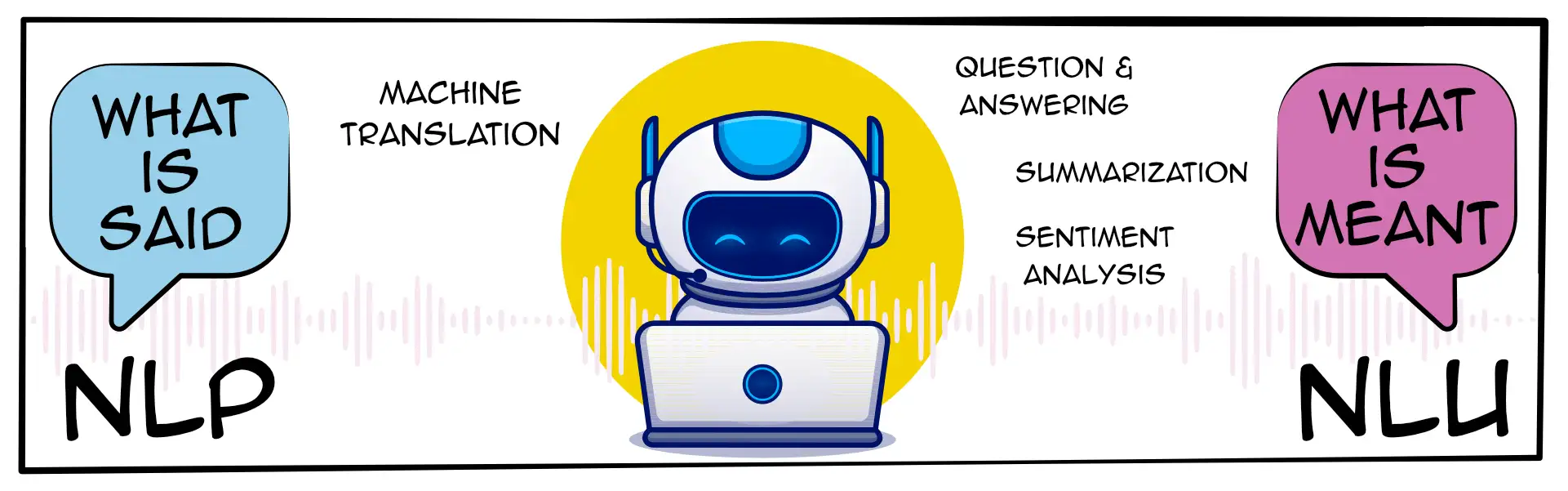

Natural Language Processing

Human language (whatever the language) is by nature imprecise, equivocal, ambiguous, whereas machines use a specific, standardised and structured language.

Natural Language Processing (NLP) comes to the rescue to enable a dialogue between humans and machines using human language. NLP is the ability of a machine to process natural language as it is written or spoken.

It is based on techniques at the crossroads of linguistics, computer science and mathematics. Combined with Machine Learning and Deep Learning, for example, it allows the creation of a powerful linguistic model that automatically analyses spoken or written sentences formulated by a human.

NLP can convert data from language (unstructured by nature) into a machine-readable format (structured).
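As a rough illustration of that conversion, the sketch below turns a free-text sentence into a small structured record. It assumes English text and a very naive approach (lowercasing, splitting into words, counting them); real NLP pipelines go much further. The function names and the example sentence are invented for this illustration.

```python
import re
from collections import Counter


def tokenize(sentence):
    """Lowercase the sentence and split it into word tokens."""
    return re.findall(r"[a-z']+", sentence.lower())


def to_structured(sentence):
    """Turn free text into a structured record a program can query."""
    tokens = tokenize(sentence)
    return {
        "tokens": tokens,
        "word_counts": dict(Counter(tokens)),
        "is_question": sentence.strip().endswith("?"),
    }


# Hypothetical customer sentence used only as sample input.
record = to_structured("Can I change my delivery address, or can I cancel?")
print(record)
```

Once the sentence is in this form, a program can look up fields such as `record["word_counts"]` instead of having to deal with raw text.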

Natural Language Understanding

Thanks to Natural Language Processing, machines can process and interpret words. However, spoken (and written) language is very complex, often implicit, ambiguous, and the meaning often depends on the context.

The new challenge is to enable machines to have a deep understanding of human language: in addition to understanding what we say, it is about really understanding what we mean. This is where Natural Language Understanding (NLU), a sub-field of NLP, comes in. Thanks to Deep Learning techniques, it is now possible to process much larger language databases and automatically analyse the semantics and syntax of sentences formulated by a human in order to understand their real meaning. However, this technique requires a large database of the language and substantial computing power to be really effective.

NLU is very useful for applications such as speech recognition, where it is very important that the algorithm is able to interpret the true meaning of a spoken query in order to deliver a relevant response.

All of these technologies are used by Telecats to develop its solutions, such as AI Routing, with the guiding idea that artificial intelligence will not replace advisors but will instead help them on a daily basis to improve customer service.